Premise: Linear Separable.

Large-Margin Separating Hyperplane $\Leftrightarrow$ tolerate more noise $\Leftrightarrow$ robustness.

Distance to Hyperplane

$$h(x)=sign(w^Tx+b)$$

want distance$(x, b w)$, with hyperplane $w^Tx^’+b=0$

We can prove that $w\perp hyperplane$, and thus $distance=project(x-x^’)\ to\ w$.

$distance(x,b,w)=\frac{|w^T(x-x^’)|)}{||w||}=\frac{|w^Tx+b|}{||w||}$

For separating hyperplane: every $n\ y_n(w^Tx_n+b)>0$, then we have:

$\mathbb{max}\limit_{b,w}\qquad margin(b,w)$

$subject\ to\qquad every\ y_n(w^Tx_n+b)>0\quad margin(b,w)=\mathbb{min}\limit_{n=1,…,N}\frac{1}{||w||}y_n(w^Tx_n+b)$

Actually, we can scale $\mathbb{min}\limit_{n=1,…,N}y_n(w^Tx_n+b)$ to 1, and the problem comes to find max $\frac{1}{||w||}$.

$\mathbb{max}\limit_{b,w}\frac{1}{||w||}\ subject\ to\ \mathbb{min}\limit_{n=1,…,N}y_n(w^Tx_n+b)=1$

Finally, we have standard large-margin hyperplane problem:

$\mathbb{min}\limit_{b,w}\qquad \frac{1}{2}w^Tw$

$subject\ to\qquad y_n(w^Tx_n+b)\geq1\ for \ all\ n$

Solving General SVM

Quadratic Programming. (?)

Reasons behind Large-margin Hyperplane

SVM(large-margin hyperplane) can be seen as a ‘weight-decay regularization’ within $E_{in}=0$

large-margin $\Rightarrow$ fewer dichotomies $\Rightarrow$ smaller ‘VC dim.’ $\Rightarrow$ better generalization.

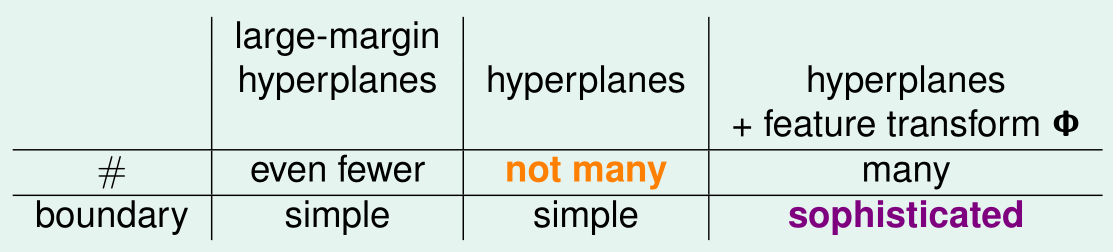

Benefits of Large-Margin Hyperplanes.

- not many good, for $d_{vc}$ and generalization.

- sophisticated good, for possbily better $E_{in}$.

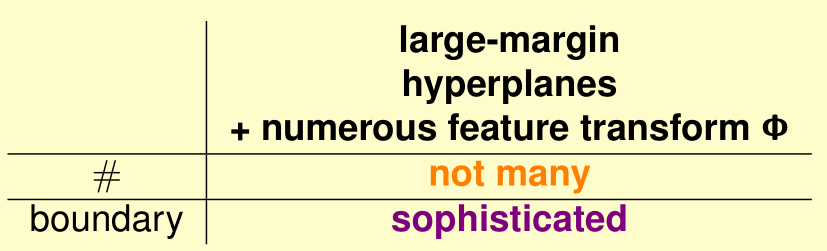

a new possibility: non-linear SVM