Linear Models for Binary Classification

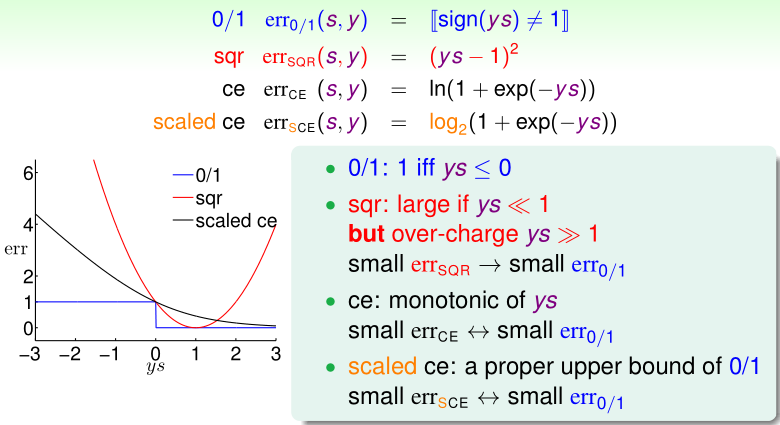

Visualizing Error Functions ($s=w^Tx\ ;\ y\epsilon\{-1,+1\}$)

- linear classification:

$\qquad h(x)=sign(s)\ ;\ err(h,x,y)=[h(x)\neq y]$

$\qquad err_{0/1}(s,y)=[sign(s)\neq y]=[sign(ys)\neq1]$ - linear regresion:

$\qquad h(x)=s\ ;\ err(h,x,y)=(h(x)-y)^2$

$\qquad err_{SQR}(s,y)=(s-y)^2=(ys-1)^2$ - logistic regression:

$\qquad h(x)=\theta(s)\ ;\ err(h,x,y)=-ln\ h(yx)$

$\qquad err_{CE}(s,y)=ln(1+exp(-ys))$

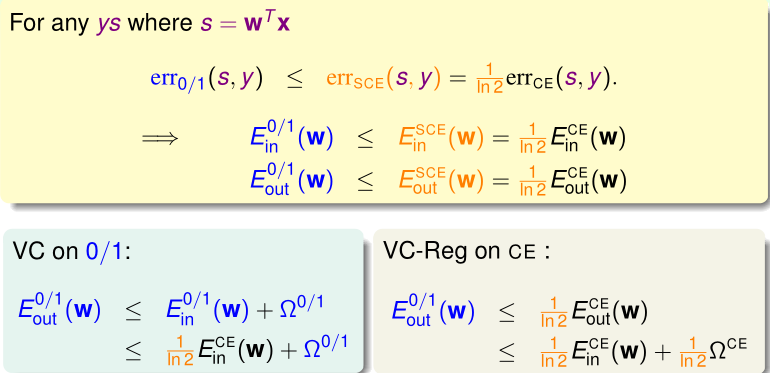

Upper Bound : useful for designing algorithmic error $\hat{err}$

Theoretical Implication of Upper Bound

$small\ E_{in}^{CE}(w)\Rightarrow small\ E_{out}^{0/1}(w)$

logistic / linear reg. for linear classification.

Regression for Classification

run logistic / linear reg. on $D$ with $y_n\epsilon\{-1,+1\}$ to get $w_{REG}$

return $g(x)=sign(w_{REG}^Tx)$

Linear regression sometimes used to set $w_0$ for PLA/pocket/logistic regression

& logistic regression often preferred over pocket.

Stochastic Gradient Descent

PLA 每次迭代只需要选择一个样本,即 $O(1)$ 的时间,而 logistic regression (包括 pocket) 需要检查数据集并通过所有样本来决定 $w_{t+1}$ ,每一次迭代需要 $O(N)$ 的时间.

可以通过随机选取某个样例来更新方向 $v$ ,将这个样例视为多个样例的平均.

pros: simple & cheaper computation —> useful for big data or online learning

cons: less stale in nature

SGD logistic regression $w_{t+1}\leftarrow w_t+\eta \theta(-y_nw_t^Tx_n)(y_nx_n)$

PLA $w_{t+1}\leftarrow w_t+1·[y_n\neq sign(w_t^Tx_n)](y_nx_n)$

- SGD logistic regression $\approx$ ‘soft’ PLA

- PLA $\approx$ SGD logistic regression with $\eta=1$ when $w_t^Tx_n$ large.

two practial rule-of-thumb: stopping condition(t large enough); $\eta$(0.1 when x in proper range)

Multiclass via Logistic Regression

采用OvA策略,即属于 $C_i$ 类别以及不属于 $C_i$ 的类别,这样对于 $N$ 个类别我们有 $N$ 个分类器. 同时为了避免出现分类边界盲区出现,使用 $P(C_i|x)$ 作为基分类器的输出.

$for\ k\epsilon\ Y$

$\qquad obtain\ w_{[k]}\ by\ running\ logistic\ regression\ on$

$\qquad\qquad D_{[k]}=\{(x_n,y_n^{‘}=2[y_n=k]-1)\}_{n=1}^N$

$return \ g(x)=\mathop{argmax}\limits_{k\epsilon Y}(w_{[k]}^Tx)$

pros: efficient, can be coupled with any logistic regression-like approaches

cons: often unbalanced $D_{[k]}$ when $K$ large

extension: multinomial (‘coupled’) logistic regression

Multiclass via Binary Classification

针对OvA中处理 $K$ 较大的情况时效果不太好的情况,提出OvO的策略. 即每一次只考虑两个类别的情况,这样对于 $K$ 个类别,会有 $\binom{K}{2}$ 个分类器.

$for\ (k,l)\ \epsilon\ Y\times Y$

$\qquad\qquad obtain\ w_{[k,l]}\ by\ running\ linear\ binary\ classification\ on$

$\qquad\qquad D_{[k,l]}=\{(x_n,y_n^{‘}=2[y_n=k]-1):y_n=k\ or\ y_n=l\}$

$return\ g(x)=tournament\ champion\ \{w_{[k,l]}^Tx\}$

pros: efficient (‘smaller’ training problems), stable, can be coupled with any binary classification approached

cons: use $O(K^2)\ w_{[k,l]}$ —> more space, slower prediction, more training